I would recommend looking at the scripting API for SkinnedMeshRenderer as well as Mesh since those hold BlendShape information. The best bet is to establish your needs and then determine the tools that fit best.
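As a rough illustration of that API, here is a minimal sketch of a component that lists a mesh's blend shapes and drives one of them by name. The class name `BlendShapeDriver`, the field names, and the `"Smile"` shape name are assumptions made for this example; your character's blend shape names depend on how it was authored. The Unity calls used (`Mesh.blendShapeCount`, `Mesh.GetBlendShapeName`, `Mesh.GetBlendShapeIndex`, `SkinnedMeshRenderer.SetBlendShapeWeight`) are part of the scripting API.

```csharp
using UnityEngine;

// Hypothetical example component: the class name, field names, and the
// default "Smile" shape name are assumptions, not part of any asset.
public class BlendShapeDriver : MonoBehaviour
{
    [SerializeField] private SkinnedMeshRenderer faceRenderer;
    [SerializeField] private string blendShapeName = "Smile";

    // Blend shape weights are typically authored in the 0..100 range.
    [SerializeField, Range(0f, 100f)] private float weight = 0f;

    private int blendShapeIndex = -1;

    private void Awake()
    {
        // Fall back to a renderer on the same GameObject if none was assigned.
        if (faceRenderer == null)
        {
            faceRenderer = GetComponent<SkinnedMeshRenderer>();
        }

        if (faceRenderer == null || faceRenderer.sharedMesh == null)
        {
            Debug.LogWarning("No SkinnedMeshRenderer with a mesh was found.");
            return;
        }

        Mesh mesh = faceRenderer.sharedMesh;

        // Log every blend shape stored on the mesh so the names can be checked.
        for (int i = 0; i < mesh.blendShapeCount; i++)
        {
            Debug.Log(i + ": " + mesh.GetBlendShapeName(i));
        }

        // GetBlendShapeIndex returns -1 when the name is not present.
        blendShapeIndex = mesh.GetBlendShapeIndex(blendShapeName);
    }

    private void Update()
    {
        if (blendShapeIndex < 0)
        {
            return;
        }

        // Apply the weight chosen in the Inspector to the selected shape.
        faceRenderer.SetBlendShapeWeight(blendShapeIndex, weight);
    }
}
```

Attaching this to the character's face mesh and moving the `weight` slider in the Inspector should show whether the named shape exists and responds. A lip sync or expression tool does essentially the same thing, with timing logic deciding the weights.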

Personally, I have used both SALSA and LipSync Pro, as well as written my own tools for specific use cases.

# Use FaceRig for facial animation pro

SALSA and LipSync Pro are both very good, but you have to compare their feature sets to see which works best for you. When it comes to timing and randomizing expressions and lip sync, there are several tools available on the Asset Store for good prices.

When it comes to building a workflow for animation, Mixamo is a nice place to start. I haven't used the FacePlus MotionBuilder plugin they offer, but I have used the FacePlus Unity plug-in, which is now unsupported. I had pretty good results. I'd say that the MotionBuilder pipeline would be superior, since the resulting animations are editable outside of Unity.

As far as doing facial animation with a Mecanim compatible character, there are two things that can be done:

1. If the character has a bone driven facial rig, you can keep additional bones and have those driven by animations. However, unless the bones are named exactly the same in every character, they cannot be retargeted.
2. If the character has a blendshape (also called morph target) driven facial rig, the blendshapes can be manipulated directly using properties of the SkinnedMeshRenderer component.

Either works fine in Unity depending on what your workflow is.

Personally, I am not an animator, so further details on what makes a good workflow would be best addressed by people much more qualified. If you need details on outside tools, you will have to provide more information on what you are trying to accomplish, and hopefully someone can chime in with some advice.

It can be used in video calls, to make videos, for broadcasts, and much more.

# Use FaceRig for facial animation mac

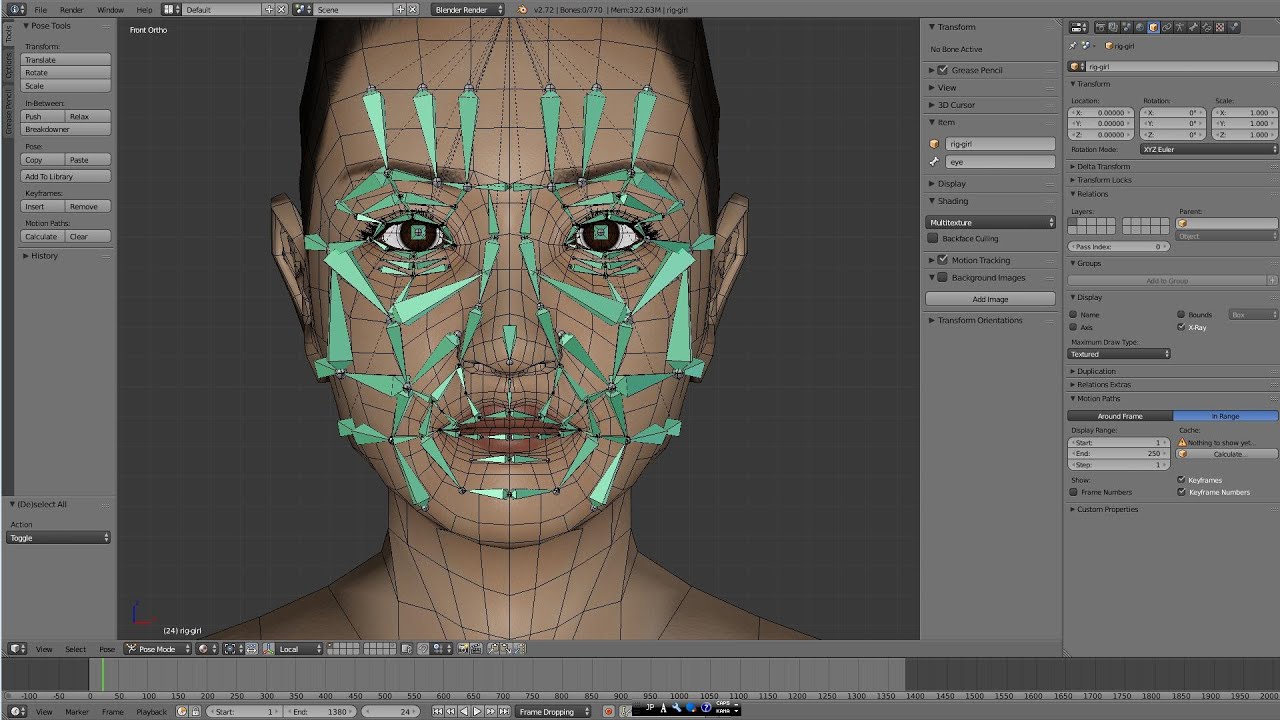

Snap Camera is a program that runs on both Windows and Mac. It lets us modify our webcam feed and add effects and filters in virtual reality.

You don't actually "rig" anything in Unity. It is not facial motion capture software. The rig must be created in an outside program (3ds Max, Maya, Blender, etc.) and is then mapped to a humanoid avatar structure for Unity to use.
